20

Chapter Outline

- Introduction to qualitative rigor (13 minute read)

- Ethical responsibility and cultural respectfulness (4 minute read)

- Critical considerations (6 minute read)

- Data capture: Striving for accuracy in our raw data (6 minute read)

- Data management: Keeping track of our data and our analysis (8 minute read)

- Tools to account for our influence (22 minute read)

Content warning: Examples in this chapter contain references to fake news, mental health treatment, peer-support, misrepresentation, equity and (dis)honesty in research.

We hear a lot about fake news these days. Fake news has to do with the quality of journalism that we are consuming. It raises questions like: Does it contain misinformation? Is it skewed or biased in its portrayal of stories? Does it leave out certain facts while inflating others? If we take this news at face value, our opinions and actions may be intentionally manipulated by poor-quality information. So, how do we avoid or challenge this? The oversimplified answer is, we find ways to check for quality. While this isn’t a chapter dedicated to fake news, it does offer an important comparison for the focus of this chapter, rigor in qualitative research. Rigor is concerned with the quality of research that we are designing and consuming. I want you to be judgmental, critical thinkers about research! As an educator who will hopefully be producing research (we need you!) and definitely consuming research, you need to be able to differentiate good science from rubbish science. Rigor will help you to do this.

This chapter will introduce you to the concept of rigor and specifically, what it looks like in qualitative research. We will begin by considering how rigor relates to issues of ethics and how thoughtfully involving community partners in our research can add additional dimensions in planning for rigor. Next, we will look at rigor in how we capture and manage qualitative data, essentially helping to ensure that we have quality raw data to work with for our study. Then, we will devote time to discussing how researchers, as human instruments, need to maintain accountability throughout the research process. Finally, we will examine tools that encourage this accountability and how they can be integrated into your research design. Our hope is that by the end of this chapter, you will begin to be able to identify some of the hallmarks of quality in qualitative research, and if you are designing a qualitative research proposal, that you consider how to build these into your design.

20.1 Introduction to qualitative rigor

Learning Objectives

Learners will be able to…

- Identify the role of rigor in qualitative research and important concepts related to qualitative rigor

- Discuss why rigor is an important consideration when conducting, critiquing and consuming qualitative research

- Differentiate between quality in quantitative and qualitative research studies

In Chapter 11 we talked about quality in quantitative studies, but we built our discussion around concepts like reliability and validity. With qualitative studies, we generally think about quality in terms of the concept of rigor. The difference between quality in quantitative research and qualitative research extends beyond the type of data (numbers vs. words/sounds/images). If you sneak a peek all the way back to Chapter 7, we discussed the idea of different paradigms or fundamental frameworks for how we can think about the world. These frameworks value different kinds of knowledge, arrive at knowledge in different ways, and evaluate the quality of knowledge with different criteria. These differences are essential in differentiating qualitative and quantitative work.

Quantitative research generally falls under a positivist paradigm, seeking to uncover knowledge that holds true across larger groups of people. To accomplish this, we need to have tools like reliability and validity to help produce internally consistent and externally generalizable findings (i.e. was our study design dependable and do our findings hold true across our population).

In contrast, qualitative research is generally considered to fall into an alternative paradigm (other than positivist), such as the interpretive paradigm which is focused on the subjective experiences of individuals and their unique perspectives. To accomplish this, we are often asking participants to expand on their ideas and interpretations. A positivist tradition requires the information collected to be very focused and discretely defined (i.e. closed questions with prescribed categories). With qualitative studies, we need to look across unique experiences reflected in the data and determine how these experiences develop a richer understanding of the phenomenon we are studying, often across numerous perspectives.

Rigor is a concept that reflects the quality of the process used in capturing, managing, and analyzing our data as we develop this rich understanding. Rigor helps to establish standards through which qualitative research is critiqued and judged, both by the scientific community and by the practitioner community.

For the scientific community, people who review qualitative research studies submitted for publication in scientific journals or for presentations at conferences will specifically look for indications of rigor, such as the tools we will discuss in this chapter. This confirms for them that the researcher(s) put safeguards in place to ensure that the research took place systematically and that consumers can be relatively confident that the findings are not fabricated and can be directly connected back to the primary sources of data that was gathered or the secondary data that was analyzed.

As a note here, as we are critiquing the research of others or developing our own studies, we also need to recognize the limitations of rigor. No research design is flawless and every researcher faces limitations and constraints. We aren’t looking for a researcher to adopt every tool we discuss below in their design. In fact, one of my mentors speaks explicitly about “misplaced rigor”, that is, using techniques to support rigor that don’t really fit what you are trying to accomplish with your research design. Suffice it to say that we can go overboard in the area of rigor and it might not serve our study’s best interest. As a consumer or evaluator of research, you want to look for steps being taken to reflect quality and transparency throughout the research process, but they should fit within the overall framework of the study and what it is trying to accomplish.

From the perspective of a practitioner, we also need to be acutely concerned with the quality of research. Education as a field has made a commitment to competent practice in service to our students based on standards of practice informed by research and scholarship. When I think about my own teachers, I hope (and believe) they were using “good” research—research that we can be confident was conducted in a credible way and whose findings are honestly and clearly represented. Don’t our students deserve the same from us?

As educators, we will be looking to qualitative research studies to provide us with information that helps us better understand our students, their experiences, and the problems they encounter. As such, we need to look for research that accurately represents:

- Who is participating in the study

- What circumstances is the study being conducted under

- What is the research attempting to determine

Further, we want to ensure that:

- Findings are presented accurately and reflect what was shared by participants (raw data)

- A reasonably good explanation of how the researcher got from the raw data to their findings is presented

- The researcher adequately considered and accounted for their potential influence on the research process

As we talk about different tools we can use to help establish qualitative rigor, I will try to point out tips for what to look for as you are reading qualitative studies that can reflect these. While rigor can’t “prove” quality, it can demonstrate steps that are taken that reflect thoughtfulness and attention on the part of the researcher(s). This is a link from the American Psychological Association on the topic of reviewing qualitative research manuscripts. It’s a bit beyond the level of critiquing that I would expect from a beginning qualitative research student; however, it does provide a really nice overview of this process. Even if you aren’t familiar with all the terms, I think it can be helpful in giving an overview of the general thought process that should be taking place.

To begin breaking down how to think about rigor, I find it helpful to have a framework to help understand different concepts that support or are associated with rigor. Lincoln and Guba (1985) have suggested such a framework for thinking about qualitative rigor that has widely contributed to standards that are often employed for qualitative projects. The overarching concept around which this framework is centered is trustworthiness. Trustworthiness is reflective of how much stock we should put in a given qualitative study—is it really worth our time, headspace, and intellectual curiosity? A study that isn’t trustworthy suggests poor quality resulting from inadequate forethought, planning, and attention to detail in how the study was carried out. This suggests that we should have little confidence in the findings of a study that is not trustworthy.

According to Lincoln and Guba (1985)[1] trustworthiness is grounded in responding to four key ideas and related questions to help you conceptualize how they relate to your study. Each of these concepts is discussed below with some considerations to help you to compare and contrast these ideas with more positivist or quantitative constructs of research quality.

Truth value

You have already been introduced to the concept of internal validity. As a reminder, establishing internal validity is a way to ensure that the change we observe in the dependent variable is the result of the variation in our independent variable: did we actually design a study that is truly testing our hypothesis? In many, if not most, qualitative studies we don’t have hypotheses or independent and dependent variables, but we do still want to design a study where our audience (and ourselves) can be relatively sure that we as the researcher arrived at our findings through a systematic and scientific process, and that those findings can be clearly linked back to the data we used and not some fabrication or falsification of that data. In other words, we are concerned with the truth value of the research process and its findings. We want to give our readers confidence that we didn’t just make up our findings or “see what we wanted to see”.

Applicability

- who we were studying

- how we went about studying them

- what we found

Consistency

Neutrality

These concepts reflect a set of standards that help to determine the integrity of qualitative studies. At the end of this chapter you will be introduced to a range of tools to help support or reflect these various standards in qualitative research. Because different qualitative designs (e.g. phenomenology, narrative, ethnographic), which you will learn more about in Chapter 22, emphasize or prioritize different aspects of quality, certain tools will be more appropriate for certain designs. Since this chapter is intended to give you a general overview of rigor in qualitative studies, exploring additional resources will be necessary to best understand which of these concepts are prioritized in each type of design and which tools best support them.

Key Takeaways

- Qualitative research is generally conducted within an interpretivist paradigm. This differs from the post-positivist paradigm in which most quantitative research originates. This fundamental difference means that the overarching aims of these different approaches to knowledge building differ, and consequently, our standards for judging the quality of research within these paradigms differ.

- Assessing the quality of qualitative research is important, both from a researcher and a practitioner perspective. On behalf of our clients and our profession, we are called to be critical consumers of research. To accomplish this, we need strategies for assessing the scientific rigor with which research is conducted.

- Trustworthiness and associated concepts, including credibility, transferability, dependability and confirmability, provide a framework for assessing rigor or quality in qualitative research.

20.2 Ethical responsibility and cultural respectfulness

Learning Objectives

Learners will be able to…

- Discuss the connection between rigor and ethics as they relate to the practice of qualitative research

- Explain how the concepts of accountability and transparency lay an ethical foundation for rigorous qualitative research

The two concepts of rigor and ethics in qualitative research are closely intertwined. It is a commitment to ethical research that leads us to conduct research in rigorous ways, so as not to put forth research that is of poor quality, misleading, or altogether false. Furthermore, the tools that demonstrate rigor in our research are reinforced by solid ethical practices. For instance, as we build a rigorous protocol for collecting interview data, part of this protocol must include a well-executed, ethical informed consent process; otherwise, we hold little hope that our efforts will lead to trustworthy data. Both ethics and rigor shine a light on our behaviors as researchers. These concepts offer standards by which others can critique our commitment to quality in the research we produce. They are both tools for accountability in the practice of research.

Related to this idea of accountability, rigor requires that we promote a sense of transparency in the qualitative research process. We will talk extensively in this chapter about tools to help support this sense of transparency, but first, I want to explore why transparency is so important for ethical qualitative research. As social workers, our own knowledge, skills, and abilities to help serve our clients are our tools. Similarly, qualitative research demands the social work researcher be an actively involved human instrument in the research process.

While quantitative researchers also make a commitment to transparency, they may have an easier job of demonstrating it. Let’s just think about the data analysis stage of research. The quantitative researcher has a data set, and based on that data set there are certain tests that they can run. Those tests are mathematically defined and computed by statistical software packages and we have established guidelines for interpreting the results and reporting the findings. There is most certainly tremendous skill and knowledge exhibited in the many decisions that go into this analysis process; however, the rules and requirements that lay the foundation for these mathematical tests mean that much of this process is prescribed for us. The prescribed procedures offer quantitative researchers a shorthand for talking about their transparency.

In comparison, the qualitative researcher, sitting down with their data for analysis, will engage in a process that will require them to make hundreds or thousands of decisions about what pieces of data mean, what label they should have, how they relate to other ideas, and what the larger significance is as it relates to their final results. That isn’t to say that we don’t have procedures and processes as qualitative researchers; we just can’t rely on mathematics to make these decisions precise and clear. We have to rely on ourselves as human instruments. Adopting a commitment to transparency in our research as qualitative researchers means that we are actively describing for our audience the role we have as human instruments and considering how this is shaping the research process. This allows us to avoid unethically representing what we did in our research process and what we found.

I think that as researchers we can sometimes think of data as an object that is not inherently valuable, but rather a means to an end. But if we see qualitative data as part of sacred stories that are being shared with us, doesn’t it feel like a more precious resource? Something worthy of thoughtfully and even gently gathering, something that needs protecting and safe-keeping. Adhering to a rigorous research process can help to honor these commitments and avoid the misuse of data as a precious resource. Thinking like this will hopefully help us to demonstrate greater cultural humility as social work researchers.

Key Takeaways

- Ethics and rigor are interdependent, and both call attention to our behaviors as researchers and the quality and care with which our research is conducted.

- Accountability and transparency in qualitative research help to demonstrate that as researchers we are acting with integrity. This means that we are clear about how we are conducting our research, what decisions we are making during the research process, and how we have arrived at these decisions.

Exercises

While this activity is early in the chapter, I want you to think for a few moments about how accountability relates to your research proposal.

20.3 Critical considerations

Learning Objectives

Learners will be able to…

- Identify some key questions for a critical critique of research planning and design

- Differentiate some alternative standards for rigor according to more participatory research approaches

As I discussed above, rigor shines a spotlight on our actions as researchers. A critical perspective is one that challenges traditional arrangements of power, control and the role of structural forces in maintaining oppression and inequality in society. From this perspective, rigor takes on additional meaning beyond the internal integrity of the qualitative processes used by you or me as researchers, and suggests that standards of quality need to address accountability to our participants and the communities that they represent, NOT just the scientific community. There are many evolving dialogues about what criteria constitute “good” research from critical traditions, including participatory and empowerment approaches that have their roots in a critical perspective. These discussions could easily stand as their own chapter; however, for our purposes, we will borrow some questions from these critical debates to consider how they might inform the work we do as qualitative researchers.

Who gets to ask the questions?

In the case of your research proposal, chances are you are outlining your research question. Because our research question truly drives our research process, it carries a lot of weight in the planning and decision-making process of research. In many instances, we bring our fully-formed research projects to participants, and they are only involved in the collection of data. But critical approaches would challenge us to involve people who are impacted by issues we are studying from the onset. How can they be involved in the early stages of study development, even in defining our question? If we treat their lived experience as expertise on the topic, why not start early using this channel to guide how we think about the issue? This challenges us to give up some of our control and to listen for the “right” question before we ask it.

Who owns the data and the findings?

Answering this question from a traditional research approach is relatively clear—the researcher or rather, the university or research institution they represent. However, critical approaches question this. Think about this specifically in terms of qualitative research. Should we be “owning” pieces of other people’s stories, since that is often the data we are working with? What say do people get in what is done with their stories and the findings that are derived from them? Unfortunately, there aren’t clear answers. These are some critical questions that we need to struggle with as qualitative researchers.

- How can we disrupt or challenge current systems of data ownership, empowering participants to maintain greater rights?

- What could more reciprocal research ownership arrangements look like?

- What are the benefits and consequences of disrupting this system?

- What are the benefits and consequences of perpetuating our current system?

What is the sustained impact of what I’m doing?

As qualitative researchers, our aim is often exploring meaning and developing understanding of social phenomena. However, criteria from more critical traditions challenge us to think more tangibly and with more immediacy. They require us to answer questions about how our involvement with this specific group of people within the context of this project may directly benefit or harm the people involved. This not only applies in the present but also in the future.

We need to consider questions like:

- How has our interaction shaped participants’ perceptions of research?

- What are the ripple effects left behind from the questions raised by our study?

- What thoughts or feelings have been reinforced or challenged, both within the community but also for outsiders?

- Have we built/strengthened/damaged relationships?

- Have we expanded/depleted resources for participants?

We need to reflect on these topics in advance and carefully consider the potential ramifications of our research before we begin. This helps to demonstrate critical rigor in our approach to research planning. Furthermore, research that is being conducted in participatory traditions should actively involve participants and other community members to define what the immediate impacts of the research should be. We need to ask early and often, what do they need as a community and how can research be a tool for accomplishing this? Their answers to these questions then become the criteria on which our research is judged. In designing research for direct and immediate change and benefit to the community, we also need to think about how well we are designing for sustainable change. Have we crafted a research project that creates lasting transformation, or something that will only be short-lived?

As students and as scholars we are often challenged by constraints as we address issues of rigor, especially some of the issues raised here. One of the biggest constraints is time. As a student, you are likely building a research proposal while balancing many demands on your time. To actively engage community members and to create sustainable research projects takes considerable time and commitment. Furthermore, we often work in highly structured systems that have many rules and regulations that can make doing things differently or challenging convention quite hard. However, we can begin to make a more equity-informed research agenda by:

- Reflecting on issues of power and control in our own projects

- Learning from research that models more reciprocal relationships between researcher and researched

- Finding new and creative ways to actively involve participants in the process of research and in sharing the benefits of research

In the resource box below, you will find links for a number of sources to learn more about participatory research methods that embody the critical perspective in research that we have been discussing.

As we turn our attention to rigor in the various aspects of the qualitative research process, continue to think about what critical criteria might also apply to each of these areas.

Key Takeaways

- Traditional research methods, including many qualitative approaches, may fail to challenge the ways that the practice of research can disenfranchise and disempower individuals and communities.

- Researchers from critical perspectives often question the power arrangements, roles, and objectives of more traditional research methods, and have been developing alternatives such as participatory research approaches. These participatory approaches engage participants in much more active ways and furthermore, they evaluate the quality of research by the direct and sustained benefit that it brings to participants and their communities.

Resources

Bergold, J., & Thomas, S. (2012). Participatory research methods: A methodological approach in motion.

Center for Community Health and Development, University of Kansas. (n.d.). Community toolbox: Section 2. Community-based participatory research.

New Tactics in Human Rights. (n.d.). Participatory research for action.

Pain et al. (2010). Participatory action research toolkit: An introduction to using PAR as an approach to learning, research and action.

Participate. (n.d.). Participatory research methods.

20.4 Data capture: Striving for accuracy in our raw data

Learning Objectives

Learners will be able to…

- Explain the importance of paying attention to the data collection process for ensuring rigor in qualitative research

- Identify key points that they will need to consider and address in developing a plan for gathering data to support rigor in their study

It is very hard to make a claim that research was conducted in a rigorous way if we don’t start with quality raw data. That is to say, if we botch our data collection, we really can’t produce trustworthy findings, no matter how good our analysis is. So what is quality raw data? From a qualitative research perspective, quality raw data means that the data we capture provides an accurate representation of what was shared with us by participants or through other data sources, such as documents. This section is meant to help you consider rigor as it pertains to how you capture your data. This might mean how you document the information from your interviews or focus groups, how you record your field notes as you are conducting observations, what you track down and access for other artifacts, or how you produce your entries in your reflexive journal (as this can become part of your data, as well).

This doesn’t mean that all your data will look the same. However, you will want to anticipate the type(s) of data you will be collecting and what format they will be in. In addition, whenever possible and appropriate, you will want the data you collect to be in a consistent format. So, if you are conducting interviews and you decide that you will be capturing this data by taking field notes, you will use a similar strategy for gathering information at each interview. You would avoid using field notes for some, recording and transcribing others, and then having emailed responses from the remaining participants. You might be wondering why this matters; after all, you are asking them the same questions. However, using these different formats to capture your data can make your data less comparable. Each format may lead to different information being shared by the participant and different information being captured by the researcher. For instance, if you rely on email responses, you lose the ability to follow up with probing questions you might have introduced in an in-person interview. Those participants who were recorded may not have felt as free to share information when compared to those interviews where you took field notes. It becomes harder to know if variation in your data is due to diversity in people’s experiences or just differences in how you went about capturing your data. Now we will turn our attention to quality in different types of data.

As qualitative researchers, we often are dealing with written data. At times, it may be participants who are doing the writing. We may ask participants to provide written responses to questions or we may use writing samples as artifacts that are produced for some other purpose that we have permission to include in our study. In either case, ideally we are including this written data with as little manipulation as possible. If we do things like take passages or ideas out of context or interpret segments in our own words, we run a much greater risk of misrepresenting the data that is being shared with us. This is a direct threat to rigor, compromising the quality of the raw data we are collecting. If we need to clarify what a participant means by one of their responses and we have the opportunity to follow up with them, we want to capture their own words as closely as we can when they provide their explanation. This is also true if we ask participants to provide us with drawings. For instance, we may ask youth to draw their response as an age-appropriate way to answer a question, but we might follow up by asking them to explain their drawing to us. We would want to capture their description as close to their own words as possible, including both the drawing and the description in our data.

Researchers may also be responsible for producing written data. Rigorous field notes strive to capture participants’ words as accurately as possible, which usually means quoting more and paraphrasing less. Of course we can’t avoid paraphrasing altogether (unless you have incredible shorthand skills, which I definitely do not), but the more interpreting or filtering we do as we capture our data, the less trustworthy it becomes. You also want to stick to a consistent method of recording your field notes. It becomes much harder to analyze your data if you have one system one day and another system another day. The quality of the notes may differ greatly and differences in organization may make it challenging to compare across your data. Finally, rigorous field notes usually capture context, as well. If you are gathering field notes for an interview or during a focus group, this may mean that you take note of non-verbal information during the exchange. If you are conducting an observation, your field notes might contain detailed information about the setting and circumstances of the observation.

As qualitative researchers, we may also be working with audio, video, or other forms of media data. Much of what we have already discussed with respect to written data also applies to these data formats. The less we manipulate or change the original data source, the better. For example, if you have an audio recording of your focus group, you want your transcript to be as close to verbatim as possible. Also, if we are working with a visual or aural medium, like a performance, capturing context and description (including audience reactions) with as much detail as possible is vital if we are looking to analyze the meaning of such an event or experience.

This topic shouldn’t require more than a couple of sentences as you write up your research proposal. However, these sentences should reflect some careful forethought and planning. Remember, this is the hand-off! If you are a relay runner, this is the point where the baton gets passed as the participant or source transfers information to the study. Also, you want to ensure that you select a strategy that can be consistent and part of a systematic process. Now we need to come up with a plan for managing our data.

Examples

Data will be collected using semi-structured interviews. Interviews will be digitally recorded and transcribed verbatim. In addition, the researcher will take field notes during each interview (see field note template, appendix A).

As they are gathered, documents will be assigned a study identification number. Along with their study ID, a brief description of the document, its source, and any other historical information will be kept in the data tracking log (see data tracking log, appendix B).

Key Takeaways

- Anticipating and planning for how you will systematically and consistently gather your data is crucial for a rigorous qualitative research project.

- When conducting qualitative research, we not only need to consider the data that we collect from other sources, but the data that we produce ourselves. As human instruments in the research process, our reaction to the data also becomes a form of data that can shape our findings. As such, we need to think about how we can capture this as well.

Exercises

20.5 Data management: Keeping track of our data and our analysis

Learning Objectives

Learners will be able to…

- Explain how data management and data analysis in qualitative projects can present unique challenges or opportunities for demonstrating quality in the research process

- Plan for key elements to address or include in a data management plan that supports qualitative rigor in the study

Elements to think about

Once data collection begins, we need a plan for what we are going to do with it. As we talked about in our chapter devoted to qualitative data collection, this is often an important point of departure between quantitative and qualitative methods. Quantitative research tends to be much more sequential, meaning that first we collect all the data, then we analyze the data. If we didn’t do it this way, we wouldn’t know what numbers we are dealing with. However, with qualitative data, we are usually collecting and beginning to analyze our data simultaneously. This offers us a great opportunity to learn from our data as we are gathering it. However, it also means that if you don’t have a plan for how you are going to manage these dual processes of data collection and data analysis, you are going to get overwhelmed twice as fast! A rigorous approach will have a clearly defined process for labeling and tracking your data artifacts, whether they are text documents (e.g. transcripts, newspaper clippings, advertisements), photos, videos, or audio recordings. These may be physical documents, but more often than not, they are electronic. In either case, a clear, documented labeling system is required. This becomes very important because you are going to need to come back to each artifact at some point during your analysis, and you need to have a way of tracking it down. Let’s talk a bit more about this.

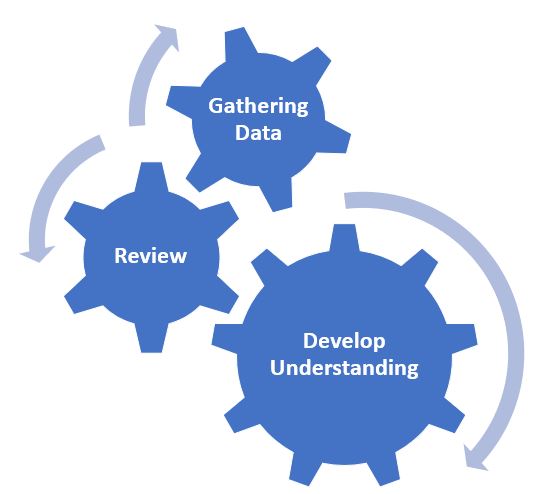

You were introduced to the term iterative process in our previous discussions about qualitative data analysis. As a reminder, an iterative process is one that involves repetition, so in the case of working with qualitative data, it means that we will be engaging in a repeating and evolving cycle of reviewing our data, noting our initial thoughts and reactions about what the data means, collecting more data, and going back to review the data again. Figure 20.1 depicts this iterative process. To adopt a rigorous approach to qualitative analysis, we need to think about how we will capture and document each point of this iterative process. This is how we demonstrate transparency in our data analysis process and how we detail the work that we are doing as human instruments.

During this process, we need to consider:

- How will we capture our thoughts about the data, including what we are specifically responding to in the data?

- How do we introduce new data into this process?

- How do we record our evolving understanding of the data and what those changes are prompted by?

So we have already talked about the importance of labeling our artifacts, but each artifact is likely to contain many ideas. For instance, think about the many ideas that are shared in a single interview. Because of this, we need to also have a clear and standardized way of labeling smaller segments of data within each artifact that represent discrete or separate ideas. If you recall back to our analysis chapter, these labels are called units. You are likely to have many, many units in each artifact. Additionally, as suggested above, you need a way to capture your thought process as you respond to the data. This documentation is called memoing, a term you were introduced to in our analysis chapter. These various components, labeling your artifacts, labeling your units, and memoing, come together as you produce a rigorous plan for how you document your data analysis. Again, rigor here is closely associated with transparency. This means that you are using these tools to document a clear road map for how you got from your raw data to your findings. The term for this road map is an audit trail, and we will speak more about it in the next section. The test of this aspect of rigor becomes your ability to work backwards, or better yet, for someone else to work backwards. Could someone not connected with your project look at your findings, and using your audit trail, trace these ideas all the way back to specific points in your raw data? The term for this is having an external audit and will also be further explained below. If you can do this, we sometimes say that your findings are clearly “grounded in your data”.

What our plan for data management might look like.

If you are working with physical data, you will need a system of logging and storing your artifacts. In addition, as you break your artifacts down into units you may well be copying pieces of these artifacts onto small note cards or post-its that serve as your data units. These smaller units become easier to manipulate and move around as you think about what ideas go together and what they mean collectively. However, each of these smaller units needs a label that links it back to its artifact. But why do I have to go through all this? Well, it isn’t just for the sake of transparency and being able to link your findings back to the original raw data, although that is certainly important. You also will likely reach a point in your analysis where themes are coming together and you are starting to make sense of things. When this occurs, you will have a pile of units from various artifacts under each of these themes. At this point you will want to know where the information in the units came from. If it was verbal data, you will want to know who said it or what source it came from. This offers us important information about the context of our findings and who/what they are connected to. We can’t determine this unless we have a good labeling system.

You will need to come up with a system that makes sense to you and fits your data. As an example, I’m often working with transcripts from interviews or focus groups. As I am collecting my data, each transcript is numbered as I obtain it. Also, the transcripts themselves have continuous line numbers on them. When I start to break up or deconstruct my data, each unit gets a label that consists of two numbers separated by a period. The number before the period is the transcript that the unit came from and the number after the period is the line number within that transcript so that I can find exactly where the information is. So, if I have a unit labeled 3.658, it means that this data can be found in my transcript labeled 3 and on line 658.
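The labeling scheme described above can be sketched in a few lines of Python. This is only an illustration, assuming labels are stored as text in the form `transcript.line`; the names `UnitLabel`, `parse_unit_label`, and `find_line`, as well as the placeholder transcript content, are hypothetical and not part of any particular tool.

```python
from typing import Dict, NamedTuple


class UnitLabel(NamedTuple):
    """A data unit's location: which transcript, and which line within it."""
    transcript: int
    line: int


def parse_unit_label(label: str) -> UnitLabel:
    """Split a label such as '3.658' into transcript 3, line 658."""
    transcript, line = label.split(".")
    return UnitLabel(int(transcript), int(line))


def find_line(transcripts: Dict[int, Dict[int, str]], label: str) -> str:
    """Look up the raw text that a unit label points to."""
    unit = parse_unit_label(label)
    return transcripts[unit.transcript][unit.line]


# Hypothetical placeholder data: transcript number -> {line number -> text}.
transcripts = {3: {658: "(text of line 658 in transcript 3)"}}

unit = parse_unit_label("3.658")
print(unit.transcript, unit.line)       # 3 658
print(find_line(transcripts, "3.658"))  # (text of line 658 in transcript 3)
```

One design choice worth noting: the label is kept as text rather than as a decimal number. Stored as numbers, 3.10 and 3.1 would be treated as the same value, even though they point to line 10 and line 1 of transcript 3.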

Now, I often use electronic versions of my transcripts when I break them up. As I showed in our data analysis chapter, I create an Excel file where I can cut and paste the data units, their labels, and the preliminary code I am assigning to each idea. I find Excel useful because I can easily sort my data by codes and start to look for emerging themes. Furthermore, above I mentioned memoing, or recording my thoughts and responses to the data. I can easily do this in Excel by adding an additional column for memoing where I can put my thoughts/responses next to a particular unit and date them, so I know when I was having that thought. Generally speaking, I find that Excel makes it pretty easy for me to manipulate or move my data around while I’m making sense of it, while also documenting this. Of course, the qualitative data analysis software packages that I mentioned in our analysis chapter all have their own systems for activities such as assigning labels, coding, and memoing. If you choose to use one of these, you will want to be well acquainted with how to do this before you start collecting data. That being said, you don’t need software or even Excel to do this work. I know many qualitative researchers who prefer having physical data in front of them, allowing them to shift note cards around and more clearly visualize their emerging themes. If you opt for this, you just need to make sure you track the moves you are making and your thought process during the analysis. And be careful if you have a cat; mine would have a field day with piles of note cards left on my desk!
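For readers who prefer to see the spreadsheet layout spelled out, here is a minimal sketch of the same idea in Python: one row per data unit, with columns for the label, the excerpt, the preliminary code, and a dated memo. The column names, the codes ("belonging", "stigma"), the memo text, and the dates are all hypothetical placeholders rather than data from a real study.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Unit:
    label: str           # 'transcript.line', e.g. '3.658'
    text: str            # the excerpt pasted from the transcript
    code: str            # preliminary code assigned to this idea
    memo: str = ""       # researcher's thought or response to this unit
    memo_date: str = ""  # when the memo was written, e.g. '2024-01-15'


def label_key(label: str) -> Tuple[int, int]:
    """Sort labels by transcript number, then by line number."""
    transcript, line = label.split(".")
    return int(transcript), int(line)


def group_by_code(units: List[Unit]) -> Dict[str, List[Unit]]:
    """Sort units by code (like sorting a spreadsheet column) and group them."""
    grouped: Dict[str, List[Unit]] = defaultdict(list)
    for unit in sorted(units, key=lambda u: (u.code, label_key(u.label))):
        grouped[unit.code].append(unit)
    return dict(grouped)


# Hypothetical rows; the excerpts are left as placeholders.
units = [
    Unit("3.658", "(excerpt)", "belonging", "Similar to unit 1.42?", "2024-01-15"),
    Unit("1.42", "(excerpt)", "belonging"),
    Unit("2.107", "(excerpt)", "stigma"),
]

for code, members in group_by_code(units).items():
    print(code, [u.label for u in members])
```

Because every row keeps its label and memo date, sorting or regrouping the units never severs the link back to the original transcript or to the moment a thought was recorded.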

Key Takeaways

- Due to the dynamic and often iterative nature of qualitative research, we need to proactively consider how we will store and analyze our qualitative data, often at the same time we are collecting it.

- Whatever data management system we plan for, it needs to have consistent ways of documenting our evolving understanding of what our data mean. This documentation acts as an important bridge between our raw qualitative data and our qualitative research findings, helping to support rigor in our design.

20.6 Tools to account for our influence

Learning Objectives

Learners will be able to…

- Identify key tools for enhancing qualitative rigor at various stages of the research process

- Begin to critique the quality of existing qualitative studies based on the use of these tools

- Determine which tools may strengthen the quality of our own qualitative research designs

So I’ve saved the best for last. This is a concrete discussion about tools that you can utilize to demonstrate qualitative rigor in your study. The previous sections in this chapter suggest topics you need to think about related to rigor, but this section suggests strategies to actually accomplish it. Remember, these are tools you should also be looking for as you examine other qualitative research studies. As I previously mentioned, you won’t be looking to use all of these in any one study, but rather determining which tools make the most sense based on your study design.

Some of these tools apply throughout the research process, while others are more specifically applied at one stage of research. For instance, an audit trail is created during your analysis phase, while peer debriefing can take place throughout all stages of your research process. These come to us from the work of Lincoln and Guba (1985).[2] Along with the argument that we need separate criteria for judging the quality of research from the interpretivist paradigm (as opposed to positivist criteria of reliability and validity), they also proposed a compendium of tools to help meet these criteria. We will review each of these tools, providing an example after the description where one is available.

Observer triangulation

Observer triangulation involves including more than one member of your research team to aid in analyzing the data. Essentially, you will have at least two sets of eyes looking at the data, drawing results from it, and then comparing findings, converging on agreement about what the final results should be. This helps us to ensure that we aren’t just seeing what we want to see.

Data triangulation

Data triangulation is a strategy that you build into your research design where you include data from multiple sources to help enhance your understanding of a topic. This might mean that you include a variety of groups of people to represent different perspectives on the issue. This can also mean that you collect different types of data. The main idea here is that by incorporating different sources of data (people or types), you are seeking to get a more well-rounded or comprehensive understanding of the focus of your study.

Example.

People: Instead of just interviewing mental health consumers about their treatment, you also include family members and providers.

Types: I have conducted a case study where we included interviews and the analysis of multiple documents, such as emails, agendas, and meeting minutes.

Peer debriefing

Peer debriefing means that you intentionally plan for and meet with a qualitative researcher outside of your team to discuss your process and findings and to help examine the decisions you are making, the logic behind them, and your potential influence and accountability in the research process. You will often meet with a peer debriefer multiple times during your research process and may do things like: review your reflexive journal; review certain aspects of your project, such as preliminary findings; discuss current decisions you are considering; and review the current status of your project. The main focus here is building in some objectivity to what can become a very subjective process. We can easily become very involved in this research and it can be hard for us to step back and thoughtfully examine the decisions we are making.

Member-checking

Member-checking has to do with incorporating research participants into the data analysis process. This may mean actively including them throughout the analysis, either as a co-researcher or as a consultant. This can also mean that once you have the findings from your analysis, you take these to your participants (or a subset of your participants) and ask them to review these findings and provide you feedback about their accuracy. When I member-check, I will often ask participants: can you hear your voice in these findings? Do you recognize what you shared with me in these results? We often need to preface member-checking by saying that we are bringing together many people’s ideas, so we are often trying to represent multiple perspectives, but we want to make sure that their perspective is included in there. This can be a very important step in ensuring that we did a reasonable job getting from our raw data to our findings: did we get it right? It also gives some power back to participants, as we are giving them some say in what our findings look like.

Thick description

Providing a thick description means that you are giving your audience a rich, detailed description of your findings and the context in which they exist. As you read a thick description, you walk away feeling like you have a very vivid picture of what the research participants felt, thought, or experienced, and that you now have a more complete understanding of the topic being studied. Of course, a thick description can’t just be made up at the end. You can’t hope to produce a thick description if you haven’t done work early on to collect detailed data and performed a thorough analysis. Our main objective with a thick description is being accountable to our audience in helping them to understand what we learned in the most comprehensive way possible.

Reflexivity

Reflexivity pertains to how we understand and account for our influence, as researchers, on the research process. In social work practice, we talk extensively about our “use of self” as social workers, meaning that we work to understand how our unique personhood (who we are) impacts or influences how we work with our clients. Reflexivity is about applying this to the process of research, rather than practice. It assumes that our values, beliefs, understanding, and experiences all may influence the decisions that we make as we engage in research. By engaging in qualitative research with reflexivity, we are attempting to be transparent about how we are shaping and being shaped by the research we are conducting.

Prolonged engagement

Prolonged engagement means that we are extensively spending time with participants or are in the community we are studying. We are visiting on multiple occasions during the study in an attempt to get the most complete picture or understanding possible. This can be very important for us as we attempt to analyze and interpret our data. If we haven’t spent enough time getting to know our participants and their community, we may miss the meaning of data that is shared with us because we don’t understand the cultural subtext in which this data exists. The main idea here is that we don’t know what we don’t know; furthermore, we can’t know it unless we invest time getting to know it! There’s no short-cut here, you have to put in the time.

Audit trail

Creating an audit trail is something we do during our data analysis process as qualitative researchers. An audit trail is essentially a map of how you got from your raw data to your research findings. This means that we should be able to work backwards, starting with our research findings and tracing them back to our raw data. It starts with labeling our data as we begin to break it apart (deconstruction) and then reassemble it (reconstruction). It allows us to determine where ideas came from and how/why we put ideas together to form broader themes. An audit trail offers transparency in our data analysis process. It is the opposite of the “black box” we spoke about in our qualitative analysis chapter, making it clear how we got from point A to point B.
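To make the idea of working backwards concrete, here is a small sketch in Python of tracing one theme back through its codes to labeled lines of raw data. It assumes units are labeled as `transcript.line` and that the researcher has recorded which codes were grouped into which theme; the theme name, codes, labels, and placeholder transcript text are all hypothetical.

```python
from typing import Dict, List, Tuple


def trace_theme(
    theme: str,
    theme_to_codes: Dict[str, List[str]],
    code_to_units: Dict[str, List[str]],
    transcripts: Dict[int, Dict[int, str]],
) -> List[Tuple[str, str, str]]:
    """Walk backwards from a theme to the raw transcript lines beneath it.

    Returns (code, unit label, raw text) for every unit under the theme.
    """
    trail = []
    for code in theme_to_codes[theme]:
        for label in code_to_units[code]:
            transcript, line = (int(part) for part in label.split("."))
            trail.append((code, label, transcripts[transcript][line]))
    return trail


# Hypothetical records kept during deconstruction and reconstruction.
theme_to_codes = {"finding community": ["belonging", "mutual support"]}
code_to_units = {"belonging": ["1.42", "3.658"], "mutual support": ["2.107"]}
transcripts = {
    1: {42: "(raw text, transcript 1, line 42)"},
    2: {107: "(raw text, transcript 2, line 107)"},
    3: {658: "(raw text, transcript 3, line 658)"},
}

for code, label, text in trace_theme(
    "finding community", theme_to_codes, code_to_units, transcripts
):
    print(code, label, text)
```

A fuller audit trail would also record why codes were grouped together, for example through dated memos, which this sketch leaves out.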

External audit

An external audit is when we actually bring in a qualitative researcher not connected to our project once the study has been completed to examine the research project and the findings to “evaluate the accuracy and evaluate whether or not the findings, interpretations and conclusions are supported by the data” (Robert Wood Johnson Foundation, External Audits). An external auditor will likely look at all of our research materials, but will make especially extensive use of our audit trail to ensure that an external observer can establish a clear link between our findings and the raw data we collected. Much like a peer debriefer, an external auditor can offer an outside critique of the study, thereby helping us to reflect on the work we are doing and how we are going about it.

Negative case analysis

Negative case analysis involves including data that contrasts, contradicts, or challenges the majority of evidence that we have found or expect to find. This may come into play in our sampling, meaning that we may seek to recruit or include a specific participant or group of participants because they represent a divergent opinion. Or, as we begin our analysis, we may identify a unique or contrasting idea or opinion that seems to contradict the majority of what our other data seem to point to. In this case, we choose to intentionally analyze and work to understand this unique perspective in our data. As with a thick description, a negative case analysis is attempting to offer the most comprehensive and complete understanding of the phenomenon we are studying, including divergent or contradictory ideas that may be held about it.

Now let’s take some time to think through each of the stages of the design process and consider how we might apply some of these strategies. Again, these tools are to help us, as human instruments, better account for our role in the qualitative research process and also to enhance the trustworthiness of our research when we share it with others. It is unrealistic that you would apply all of these, but attention to some will indicate that you have been thoughtful in your design and concerned about the quality of your work and the confidence in your findings.

First let’s discuss sampling. We have already discussed that qualitative research generally relies on non-probability sampling and have reviewed some specific non-probability strategies you might use. However, along with selecting a strategy, you might also include a couple of the rigor-related tools discussed above. First, you might choose to employ data triangulation. For instance, maybe you are conducting an ethnography studying the culture of a peer-support clubhouse. As you are designing your study, along with extensive observations you plan to make in the clubhouse, you are also going to conduct interviews with staff, board members, and focus groups with members. In this way you are combining different types of data (i.e. observations, focus groups, interviews) and perspectives (i.e. yourself as the researcher, members, staff, board). In addition, you might also consider using negative case analysis. At the planning stage, this could involve you intentionally sampling a case or set of cases that are likely to provide an alternative view or perspective compared to what you might expect to find. Finally, specifically articulating your sampling rationale can also enhance the rigor of your research (Barusch, Gringeri, & George, 2011).[3] While this isn’t listed in our tools table, it is generally a good practice when reporting your research (qualitative or quantitative) to outline your sampling strategy with a brief rationale for the choices you made. This helps to improve the transparency of your study.

Next, we can progress to data gathering. The main rigor-related tool that directly applies to this stage of your design is likely prolonged engagement. Here we build in or plan to spend extensive time with participants gathering data. This might mean that we return for repeated interviews with the same participants or that we go back numerous times to make observations and take field notes. While this can take many forms, the overarching idea here is that you build in time to immerse yourself in the context and culture that you are studying. Again, there is no short-cut here; it demands time in the field getting to know people, places, significance, history, etc. You need to appreciate the context and the culture of the situation you are studying. Something special to consider here is insider/outsider status. If you would consider yourself an “outsider”, that is to say someone who does not belong to the same group or community of people you are studying, it may be quite obvious that you will need to spend time getting to know this group and take considerable time observing and reflecting on the significance of what you see. However, if you are a researcher who is a member of the particular community you are studying, or an “insider”, I would suggest that you still need to work to objectively take a step back, make observations, and try to reflect on what you see, what you thought you knew, and what you come to know about the community you belong to. In both cases, prolonged engagement requires good self-reflection and observation skills.

A number of these tools may be applied during the data analysis process. First, if you have a research team, you might use observer triangulation, although this might not be an option as a student unless you are building a proposal as a group. As explained above, observer triangulation means that more than one of you will be examining the data that has been collected and drawing results from it. You will then compare these results and ultimately converge on your findings.

Example. I’m currently using the following strategy on a project where we are analyzing focus group data that was collected over a number of focus groups. We have a team of four researchers and our process involves:

- reviewing our initial focus group transcripts

- individually identifying important categories that were present

- collectively processing these together and identifying specific labels we would use for a second round of coding

- individually returning to the transcripts with our codes and coding all the transcripts

- collectively meeting again to discuss what subthemes fell under each of the codes and if the codes fit or needed to be changed/merged/expanded

While the process was complex, I do believe this triangulation of observers enriched our analysis process. It helped us to gain a clearer understanding of our results as we collectively discussed and debated what each theme meant based on our individual understandings of the data.
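As a rough illustration of the comparison step in observer triangulation, the sketch below lists the data units where coders assigned different codes, so the team knows what to bring to its next discussion. This is not the procedure our team used; the coder names, unit labels, and codes are hypothetical, and the function `find_disagreements` is invented for this example.

```python
from typing import Dict


def find_disagreements(
    codings: Dict[str, Dict[str, str]]
) -> Dict[str, Dict[str, str]]:
    """Return the units that coders did not all code the same way.

    `codings` maps each coder's name to {unit label -> code}. A unit that a
    coder skipped is reported as '(uncoded)' for that coder.
    """
    all_labels = set()
    for assigned in codings.values():
        all_labels.update(assigned)

    disagreements = {}
    for label in sorted(all_labels):
        by_coder = {
            coder: assigned.get(label, "(uncoded)")
            for coder, assigned in codings.items()
        }
        if len(set(by_coder.values())) > 1:
            disagreements[label] = by_coder
    return disagreements


# Hypothetical codings from two members of a research team.
codings = {
    "coder_a": {"1.42": "belonging", "2.107": "stigma", "3.658": "belonging"},
    "coder_b": {"1.42": "belonging", "2.107": "isolation"},
}

for label, by_coder in find_disagreements(codings).items():
    print(label, by_coder)
```

The point of such a list is to prompt conversation, not to settle it. In the process described above, the team still meets to discuss and debate what each code means before converging on findings.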

While we did discuss negative case analysis above in the sampling phase, it is also worth mentioning here. Contradictory findings may creep up during our analysis. One of our participants may share something or we may find something in a document that seemingly is at odds with the majority of the rest of our data. Rather than ignoring this, negative case analysis would seek to understand this perspective and what might be behind this contradiction. In addition, we may choose to construct an audit trail as we move from raw data to our research findings during our data analysis. This means that we will institute a strategy for tracking our analysis process. I imagine that most researchers develop their own variation on this tracking process, but at its core, you need to find a way to label your segments of data so that you know where they came from once you start to break them up. Furthermore, you will be making decisions about what groups of data belong together and what they mean. Your tracking process for your audit trail will also have to provide a way to document how you arrived at these decisions. Often towards the end of an analysis process, researchers may choose to employ member checking (although you may also implement this throughout your analysis). In the example above where I was discussing our focus group project, we plan to take our findings back to some of our focus group participants to see if they feel that we captured the important information based on what they shared with us. As discussed in sampling, it is also a good practice to make sure to articulate your qualitative analysis process clearly. Unfortunately, I’ve read a number of qualitative studies where the researchers provide little detail regarding what their analysis looked like and how they arrived at their results. This often leaves me with questions about the quality of what was done.

Now we need to share our research with others. The most relevant tool specific to this phase is providing a thick description of our results. As indicated in the table, a thick description means that we offer our audience a very detailed, rich narrative to help them interpret and make sense of our results. Remember, the main aim of qualitative research is not necessarily to produce results that generalize to a large group of people. Rather, we are seeking to enhance understanding about a particular experience, issue, or phenomenon by studying it very extensively for a relatively small sample. This produces a deep, as opposed to a broad, understanding. A thick description can be very helpful by offering detailed information about the sample, the context in which the study takes place, and a thorough explanation of findings and often how they relate to each other. As consumers of research, a thick description can help us to make our own judgments about the implications of these results and what other situations or populations these findings might apply to.

You may have noticed that a few of the tools in our table haven’t yet been discussed in the qualitative process. This is because some of these rigor-related tools are meant to span the research process. To begin with, reflexivity is a tool that is best applied throughout qualitative research. I encourage students in my social work practice classes to find ways to build reflexivity into their professional lives as a way of improving their professional skills. This is no less true of qualitative research students. Throughout our research process, we need to consider how our use-of-self is shaping the decisions we are making and how the research may be transforming us during the process. What led you to choose your research question? Why did you group those ideas together? What caused you to label your theme that? What words do you use to talk about your study at a conference? The qualitative researcher has much influence throughout this process, and self-examination of that influence can be an important piece of rigor. As an example, one step that I sometimes build into qualitative projects is reflexively journaling before and after interviews. I’m often driving to these interviews, so I’ll turn my Bluetooth on in the car and capture my thoughts before and after, transcribing them later. This helps me to check in with myself during data collection and can help me illuminate insights I might otherwise miss. I have also found this to be helpful to use in my peer debriefing. Peer debriefing can be used throughout the research process. Meeting with a peer debriefer throughout the research process can be a good way to consistently reflect on your progress and the decisions you are making throughout a project. A peer debriefer can make connections that we may otherwise miss and question aspects of our project that may be important for us to explore. As I mentioned, combining reflexivity with peer debriefing can be a powerful tool for processing our self-reflection in connection with the progress of our project.

Finally, the use of an external audit really doesn’t come into play until the end of the research process, but an external auditor will look extensively at the whole research process. Again, this is a researcher who is unattached to the project and seeking to follow the path of the project in hopes of providing an external perspective on the trustworthiness of the research process and its findings. Often, these auditors will begin at the end, starting with the findings, and attempt to trace backwards to the beginning of the project. This is often quite a laborious task and some qualitative scholars debate whether the attention to objectivity in this strategy may be at odds with the aims of qualitative research in illuminating the uniquely subjective experiences of participants by inherently subjective researchers. However, it can be a powerful tool for demonstrating that a systematic approach was used.

As you are thinking about designing your qualitative research proposal, consider how you might use some of these tools to strengthen the quality of your proposed research. Again, you might be using these throughout the entire research process, or applying them more specifically to one stage of the process (e.g. data collection, data analysis). In addition, as you are reviewing qualitative studies to include in your literature review or just in developing your understanding of the topic, make sure to look out for some of these tools being used. They are general indicators that we can use to assess the attention and care that was given to using a scientific approach to producing the knowledge that is being shared.

Key Takeaways

- As qualitative researchers there are a number of tools at your disposal to help support quality and rigor. These tools can aid you in assessing the quality of others’ work and in supporting the quality of your own design.

- Qualitative rigor is not a box we can tick as complete somewhere along our research project’s timeline. It is something that needs to be attended to thoughtfully throughout the research process; it is a commitment we make to our participants and to our potential audience.

Exercises

List out 2-3 tools that seem like they would be a good fit for supporting the rigor of your qualitative proposal. Also, provide a justification as to why they seem relevant to the design of your research and what you are trying to accomplish.

- Tool:

- Justification:

- Tool:

- Justification:

- Tool:

- Justification:

- Lincoln, Y. S., & Guba, E. G. (1985). Naturalistic inquiry. Newbury Park, CA: Sage.

- Lincoln, Y. S., & Guba, E. G. (1985). Naturalistic inquiry. Newbury Park, CA: Sage.

- Barusch, A., Gringeri, C., & George, M. (2011). Rigor in qualitative social work research: A review of strategies used in published articles. Social Work Research, 35(1), 11-19.

Rigor is the process through which we demonstrate, to the best of our ability, that our research is empirically sound and reflects a scientific approach to knowledge building.

The ability of a measurement tool to measure a phenomenon the same way, time after time. Note: Reliability does not imply validity.

The extent to which the scores from a measure represent the variable they are intended to.

a paradigm guided by the principles of objectivity, knowability, and deductive logic

Findings from a research study that apply to a larger group of people (beyond the sample). Producing generalizable findings requires starting with a representative sample.

a paradigm based on the idea that social context and interaction frame our realities

in a literature review, a source that describes primary data collected and analyzed by the author, rather than only reviewing what other researchers have found

Data someone else has collected that you have permission to use in your research.

unprocessed data that researchers can analyze using quantitative and qualitative methods (e.g., responses to a survey or interview transcripts)

Trustworthiness is a quality reflected by qualitative research that is conducted in a credible way; a way that should produce confidence in its findings.

Ability to say that one variable "causes" something to happen to another variable. Very important to assess when thinking about studies that examine causation such as experimental or quasi-experimental designs.

The level of confidence that research is obtained through a systematic and scientific process and that findings can be clearly connected to the data they are based on (and not some fabrication or falsification of that data).

The ability to apply research findings beyond the study sample to some broader population.

This is a synonymous term for generalizability - the ability to apply the findings of a study beyond the sample to a broader population.

The potential for qualitative research findings to be applicable to other situations or with other people outside of the research study itself.

Consistency is the idea that we use a systematic (and potentially repeatable) process when conducting our research.

a single truth, observed without bias, that is universally applicable

one truth among many, bound within a social and cultural context

The idea that qualitative researchers attempt to limit or at the very least account for their own biases, motivations, interests and opinions during the research process.

A process through which the researcher explains the research process, procedures, risks and benefits to a potential participant, usually through a written document, which the participant then signs as evidence of their agreement to participate.

Data that accurately portrays information that was shared in or by the original source.

The idea that researchers are responsible for conducting research that is ethical, honest, and following accepted research practices.

The process of research is recorded and described in such a way that the steps the researcher took throughout the research process are clear.

A general approach to research that is conscientious of the dynamics of power and control created by the act of research and attempts to actively address these dynamics through the process and outcomes of research.

A research journal that helps the researcher to reflect on and consider their thoughts and reactions to the research process and how it may be shaping the study

Notes that are taken by the researcher while they are in the field, gathering data.

An iterative approach means that after planning and once we begin collecting data, we begin analyzing data as it is coming in. This early analysis of our (incomplete) data then impacts our planning, ongoing data gathering and future analysis as it progresses.

discrete segments of data

Memoing is the act of recording your thoughts, reactions, quandaries as you are reviewing the data you are gathering.

An audit trail is a system of documenting in qualitative research analysis that allows you to link your final results with your original raw data. Using an audit trail, an independent researcher should be able to start with your results and trace the research process backwards to the raw data. This helps to strengthen the trustworthiness of the research.

Having an objective person, someone not connected to your study, try to start with your findings and trace them back to your raw data using your audit trail. A tool to help demonstrate rigor in qualitative research.

Context is the circumstances surrounding an artifact, event, or experience.

A code is a label that we place on a segment of data that seems to represent the main idea of that segment.

Part of the qualitative data analysis process where we begin to interpret and assign meaning to the data.

A qualitative research tool for enhancing rigor by partnering with a peer researcher who is not connected with your project (and is therefore more objective) to discuss project details, your decisions, and perhaps your reflexive journal, as a means of helping to reduce researcher bias and maintain consistency and transparency in the research process.

including more than one member of your research team to aid in analyzing the data

Including data from multiple sources to help enhance your understanding of a topic

Member checking involves taking our results back to participants to see if we "got it right" in our analysis. While our findings bring together many different people's data into one set of findings, participants should still be able to recognize their input and feel like their ideas and experiences have been captured adequately.

A thick description is a very complete, detailed, and illustrative account of the subject that is being described.

How we understand and account for our influence, as researchers, on the research process.

This means that, as researchers, we spend extensive time with participants or in the community we are studying.

Including data that contrasts, contradicts, or challenges the majority of evidence that we have found or expect to find

sampling approaches for which a person’s likelihood of being selected for membership in the sample is unknown

Ethnography is a qualitative research design that is used when we are attempting to learn about a culture by observing people in their natural environment.